Recently, several hacker organizations have turned to ransom attacks that target specific products. As of last week, they had taken control of at least 34,000 MongoDB databases, wiped the data, and demanded a ransom for its return.

Overview

Then, on January 18, 2017, hundreds of ElasticSearch servers were hit by the same type of attack and had their data wiped. According to security researcher Niall Merrigan, at least 2,711 ElasticSearch servers have been attacked so far. Following these two waves, hackers are now targeting Hadoop Distributed File System (HDFS) cluster installations. All of these ransom attacks used similar methods: no ransomware was involved and no common vulnerabilities were exploited. Instead, insecure configurations of these products gave attackers the opportunity to manipulate data effortlessly.

What Is Hadoop?

Apache Hadoop is an open-source software framework, distributed under the Apache 2.0 license, for the distributed storage and processing of large data sets. Written in Java, Hadoop lets users write and run distributed applications that process very large data sets on computer clusters. The framework has two core modules: HDFS and MapReduce. HDFS is highly fault-tolerant and designed to be deployed on low-cost hardware. It provides high-throughput access to application data and suits applications that have large data sets. MapReduce is a software framework for easily writing applications that process vast amounts of data (multi-terabyte data sets) in parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.

Ransom Attack Pattern

Recent ransom attacks targeting MongoDB, ElasticSearch, and Hadoop take similar forms. No ransomware or common vulnerabilities were involved. Instead, insecure configurations of these products were exploited. Take MongoDB as an example. The attacked databases were directly exposed to the Internet without any authentication mechanism. Once attackers log in to such Internet-facing databases, they can perform malicious operations such as deleting data. A similar method was used against ElasticSearch servers, which by default use port 9300 for TCP access and port 9200 for HTTP access. If these ports are exposed to the Internet without any protection, access to them requires no identity authentication. Anyone who connects to such a port can perform arbitrary operations through the related APIs, such as adding, deleting, or tampering with data on the ElasticSearch servers.

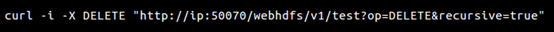
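To check a server you administer for this condition, a minimal sketch like the following sends one unauthenticated HTTP GET and classifies the result. The function name `probe_http` and the three result labels are hypothetical. Treating a 401 or 403 status (or any other HTTP error) as "some access control is in place" is an assumption, not a guarantee.

```python
import urllib.error
import urllib.request


def probe_http(url, timeout=3.0):
    """Send one unauthenticated GET and classify the outcome.

    Returns "open" if the server answers with a 2xx status,
    "auth required" if it answers with an HTTP error such as 401 or 403,
    and "unreachable" if no HTTP response is received at all.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            if 200 <= resp.status < 300:
                return "open"
            return "auth required"
    except urllib.error.HTTPError:
        # The server responded, but refused the anonymous request.
        return "auth required"
    except OSError:
        # Covers URLError, refused connections, and timeouts.
        return "unreachable"
```

For an ElasticSearch node, the URL would be the HTTP port mentioned above, for example `http://<your-host>:9200/`. A result of `"open"` from a machine outside your network suggests the port is reachable without authentication.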

The success of the ransom attack targeting Hadoop can also be attributed to Internet-facing ports. For convenience, or for lack of security awareness, Hadoop cluster users often open ports such as 50070, the HDFS web port, directly to the Internet. An attacker who can reach such a port can then run commands that manipulate data on the cluster, for example a command that recursively deletes all contents of the test directory.
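As a rough illustration of why an exposed port 50070 is dangerous, the sketch below builds (but does not send) request URLs in the form used by the WebHDFS REST API. It assumes WebHDFS is enabled on the NameNode. The host name `namenode.example.com` and the helper `webhdfs_url` are hypothetical.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, path, op, port=50070, **params):
    """Build a WebHDFS REST URL. Nothing is sent over the network."""
    query = urlencode({"op": op, **params})
    return "http://{}:{}/webhdfs/v1/{}?{}".format(
        host, port, quote(path.lstrip("/")), query
    )


# Read-only listing: an administrator could send this with HTTP GET
# to audit whether their own cluster answers without credentials.
list_url = webhdfs_url("namenode.example.com", "/test", "LISTSTATUS")

# Destructive form: sent with the HTTP DELETE method, this asks HDFS
# to remove the /test directory and everything below it.
delete_url = webhdfs_url(
    "namenode.example.com", "/test", "DELETE", recursive="true"
)

print(list_url)
print(delete_url)
```

When no authentication is configured, a request in either form needs no credentials, which is why a single HTTP call can be enough to wipe a directory tree.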

According to shodan.io statistics, roughly 8,300 Hadoop clusters in China have port 50070 exposed to the Internet, as shown in the following figure.

(Source: shodan.io)

Protection Measures

- Close unused ports. The following table lists default ports used by Hadoop.

| Component | Service | Default port |
| --- | --- | --- |
| HDFS | NameNode | 50070 |
| HDFS | SecondaryNameNode | 50090 |
| HDFS | DataNode | 50075 |
| HDFS | Backup/Checkpoint Node | 50105 |
| YARN | ResourceManager | 8088 |
| YARN | JobTracker | 50030 |
| YARN | TaskTracker | 50060 |
| Hue | Hue | 8080 |

If you do not need a port listed in the preceding table, close it or configure a firewall policy to block packets to it.
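To see which of these default ports currently accept connections on a host you administer, a simple TCP connect check is one option. The sketch below is a minimal example under that assumption. The names `HADOOP_DEFAULT_PORTS`, `is_port_open`, and `open_hadoop_ports` are hypothetical, and the port list simply mirrors the table above.

```python
import socket

# Default ports from the table above (service names as listed there).
HADOOP_DEFAULT_PORTS = {
    50070: "HDFS NameNode",
    50090: "HDFS SecondaryNameNode",
    50075: "HDFS DataNode",
    50105: "HDFS Backup/Checkpoint Node",
    8088: "YARN ResourceManager",
    50030: "JobTracker",
    50060: "TaskTracker",
    8080: "Hue",
}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered, timed out, or host not resolvable.
        return False


def open_hadoop_ports(host, timeout=1.0):
    """Return the subset of default Hadoop ports that accept connections."""
    return {
        port: name
        for port, name in HADOOP_DEFAULT_PORTS.items()
        if is_port_open(host, port, timeout)
    }
```

Running `open_hadoop_ports("<your-host>")` from a machine outside your network gives a first impression of what is reachable from the Internet. An empty result from outside, with the services still reachable from inside, is what a working firewall policy should look like.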

- Enable Kerberos. Hadoop versions before 1.0.0/CDH3 lack security authentication (CDH is Cloudera’s commercial distribution of Hadoop, built on modified source code). Hadoop 1.0.0/CDH3 and later support the Kerberos authentication mechanism, so that nodes in a cluster can be used only after they have been authenticated with keys.

- Use NSFOCUS Web Vulnerability Scanning System (WVSS) to scan systems for insecure configurations. MongoDB, CouchDB, Solr, ElasticSearch, and Hadoop do not require authentication when installed in default mode. WVSS can check whether an authentication mechanism is in place for access to these products.
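For the Kerberos measure in the list above, one quick sanity check is to read the cluster's `core-site.xml` and look at the `hadoop.security.authentication` property, which is `simple` (no real authentication) unless it is set to `kerberos`. The sketch below parses an inline sample rather than a real file. `SAMPLE_CORE_SITE`, `read_properties`, and `kerberos_enabled` are hypothetical names, and the check covers only this one setting, not a complete Kerberos deployment.

```python
import xml.etree.ElementTree as ET

# Illustrative fragment in the usual Hadoop configuration layout.
SAMPLE_CORE_SITE = """<configuration>
  <property>
    <name>hadoop.security.authentication</name>
    <value>kerberos</value>
  </property>
</configuration>
"""


def read_properties(xml_text):
    """Return the name/value pairs of a Hadoop-style configuration."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def kerberos_enabled(xml_text):
    """True if the configuration selects Kerberos authentication."""
    props = read_properties(xml_text)
    # Hadoop falls back to "simple" when the property is absent.
    mode = props.get("hadoop.security.authentication", "simple")
    return mode.lower() == "kerberos"


print(kerberos_enabled(SAMPLE_CORE_SITE))
```

To audit a real cluster, the same function could be given the contents of the deployed `core-site.xml`, whose location depends on the installation.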

About NSFOCUS

NSFOCUS IB is a wholly owned subsidiary of NSFOCUS, an enterprise application and network security provider, with operations in the Americas, Europe, the Middle East, Southeast Asia and Japan. NSFOCUS IB has a proven track record of combatting the increasingly complex cyber threat landscape through the construction and implementation of multi-layered defense systems. The company’s Intelligent Hybrid Security strategy utilizes both cloud and on-premises security platforms, built on a foundation of real-time global threat intelligence, to provide unified, multi-layer protection from advanced cyber threats.

For more information about NSFOCUS, please visit http://www.nsfocusglobal.com.

NSFOCUS, NSFOCUS IB, and NSFOCUS, INC. are trademarks or registered trademarks of NSFOCUS, Inc. All other names and trademarks are property of their respective firms.